Is your LLM use compliant?

Foundation Models (FMs) mark the beginning of a new era in machine learning and artificial intelligence. We can enable many new use-cases on them. Driven by models such as GPT-4 and Stable Diffusion, the hype in the industry is palpable.

But if you want to use foundation models in your company, there are two big questions to answer. Does the model allow commercial use? Is the model compliant with the new EU AI Regulation?

What are foundation models?

Let’s start with some definitions, so that we understand what we talk about.

Foundation models (FM) are AI models trained on large quantities of unlabeled data. They are usually trained through self-supervised learning. This process results in generalized models capable of a wide variety of tasks with remarkable accuracy.

Data scientists can build upon generalised foundation models via a process called “fine-tuning”. This way they can create custom versions with domain-specific training data. These fine-tuned models are better at some particular task.

That’s where the “foundation” in foundation models comes in. The “fine-tuning” approach enhances their performance, at a lower cost than training the model from scratch.

The foundation models have inherent limitations. When a task exceeds a foundation model’s capabilities, it can return an incorrect answer. This is the so called “hallucination” you have heard about. Those errors appear as plausible as a correct response to an untrained eye. Only an expert can discern between them, making it a serious issue in some tasks.

The problem foundation models solve

Why have foundation models become so popular? The previous section hinted at it, but let’s explain it in more detail.

New AI systems are helping solve many real-world problems. But creating and deploying each new system requires a considerable amount of resources.

If you want to use AI in a new domain, you need to ensure that there is a well-labelled and large-enough dataset for the task. If you are innovating, a dataset will often not exist. You’ll have to spend thousands of hours finding and labelling appropriate data for the dataset.

Then you need to train your model, a task that requires a lot of expensive resources (GPUs) and time. For example, is it estimated that GPT-3 took around 1,000 GPU and 3 months of time, for a cost of more than $4 million. And that is not the latest model.

Using foundation models, you can replace both the creation of the dataset and the training by fine-tunning, which is much faster and cheaper to do. Provided, that is, that your foundation model allows you to do so.

The licensing problem

The first issue you will come across when using a foundation model is that the model doesn’t allow commercial use. Many of the foundation models available are coming from research institutions. Research institutions have different incentives and often add this restriction.

Let’s look at a well-known model as an example: LLaMA. LLaMA is the foundation model created by Meta. Meta is, obviously, not a research institution. Despite this, Meta released the source code, but restricted access to the weights of the model. Not only that, at the time of this writing the model can’t be used for commercial purposes.

Not long after publication there was a leak of the weights. This opened the door to other implementations of the model, like Open-LLaMA. They may perform similarly to LLaMA and you can use them as they have permissive licenses. But you can’t use LLaMA itself.

Not all companies restrict the models this way. As an example, you have Falcon. This is a newer open-source model, licensed under Apache 2.0. At the time of this writing, it outperforms LLaMA in some tests. And the license, Apache 2.0, allows for commercial use.

On the face of it, this seems to be a simple problem to tackle. Choose a model that performs well and has a permissive license. Potentially, one of the Open LLM Leaderboard. Do some fine-tunning on top of it, and you are set.

In practice, there are some complications. For example, Falcon is a model trained mostly on English, German, Spanish, and French. It doesn’t generalise well to other languages. This makes it unsuitable for companies targetting the Asian market, for example.

Other models seem to rely on text generated by models like GPT-3.5 or GPT-4 for their training. This means that they may include biases and alignment towards certain outcomes. This can reduce their effectiveness for some use cases.

Choosing a model becomes a matter for finding a foundational model that has a license for commercial purposes, but also can do the task you need.

The EU AI directive

The European Union (EU) is finalizing its AI Act as the world’s first comprehensive regulation to govern AI. The Act includes explicit obligations for foundation model providers. This can have a direct impact in your choice of foundational model.

A provider that falls outside regulation may be forced to stop providing the model. This can happen at short notice, risking the business of the users of that model.

As a result, this means that choosing a foundation model has a third angle: compliance with the AI Act. That, of course, alongside its license implications and training suitability for the business use case.

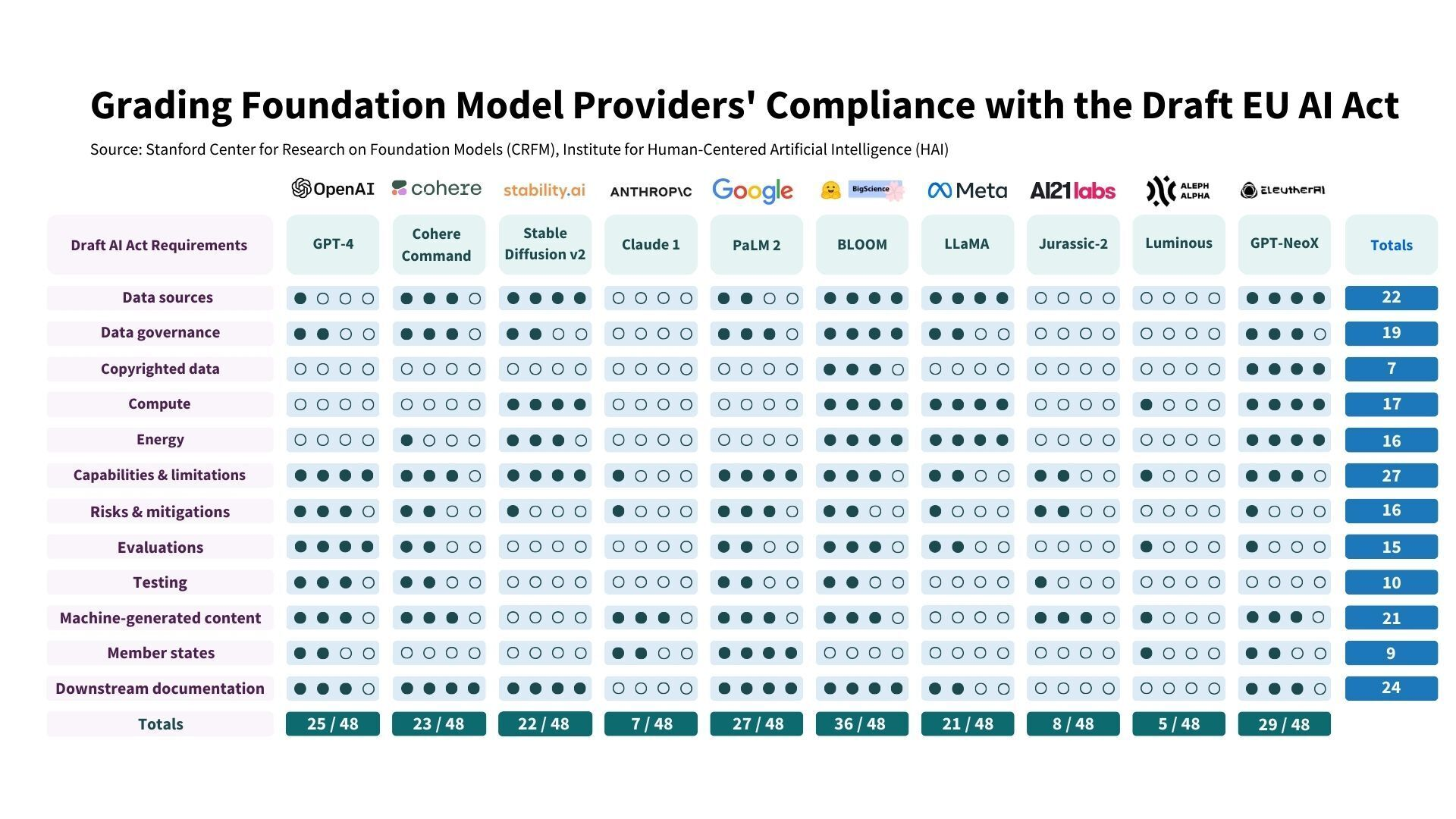

Unfortunately, the news are not great at this time. A study by Stanford shows that no foundation model is currently compliant. We recommend reading the article.

The good news are that the issues are solvable, and the AI Act remains under discussion. Probably, by the time it gets published the main foundation models will be compliant.

How some popular models fare

Given all the above, the natural question is: can I use a particular model? Given the amount of OSS models available (see Hugging Face Leaderboard for an example) it is hard to build a comprehensive list.

But we have created a table with some popular models which may help you navigate this question. The list is correct at the time of the writing:

| Name (and link) | License |

|---|---|

| LLaMA | Not for commercial purposes |

| llama.cpp | Depends on the model chosen |

| OpenLLaMA | Apache 2.0 License |

| lit-LLaMA | Apache 2.0 License |

| GPT4All | MIT License |

| WizardLM | Not for commercial purposes |

| StableLM | Apache 2.0 License |

| MPT | Offers commercial License |

| OpenAI | Offers commercial License |

| Falcon | Apache 2.0 License |

| Claude | Offers commercial License |

| PaLM API | Offers commercial License |

| XGen | Apache 2.0 License |

Please note that the list doesn’t state restrictions of a model, like support for oriental languages. If you have particular needs for your use case, you should check the model training information.

Conclusion

Choosing the right foundational model for your company is not a trivial task. You need to consider their license, their training restrictions, and the compliance implications of the model. It is a tedious task that requires checking foundational models you want to use one by one. But not doing so could have serious consequences for your business.